According to CRN, chip designers such as Intel, AMD, and Qualcomm are embedding Neural Processing Units (NPUs) into PCs to handle security workloads, which Acronis says can free up as much as 92% of CPU resources. Nvidia is extending confidential computing with its Blackwell GPUs and TEE-IO technology, aiming to create a unified security domain across all 72 GPUs of its Vera Rubin NVL72 rack. Security firms are already leveraging this hardware: Bufferzone claims its NPU/GPU-based anti-phishing has 70% less latency than cloud-based approaches, and McAfee's deepfake audio detector requires an NPU rated at 40 TOPS or more. Nvidia's Morpheus SDK uses GPUs and DPUs for data-center-wide digital fingerprinting, which the company claims cuts threat detection time from weeks to minutes.

The Shift From Cloud to Silicon

Here’s the thing: for years, “advanced” security meant shipping data to the cloud for analysis. That created latency and privacy concerns. What’s happening now is a fundamental shift. The AI chip—whether it’s an NPU in your laptop or a GPU in a data center—is becoming the first line of defense, right on the device. That’s a big deal. It means your anti-phishing or ransomware detection can work offline, in real-time, without draining your battery because it’s not constantly pinging a server. Bufferzone’s claim of never sending data to the cloud is a direct shot at the old model. But is moving everything on-device the ultimate answer? It has its own trade-offs.

Performance Promises and Practical Reality

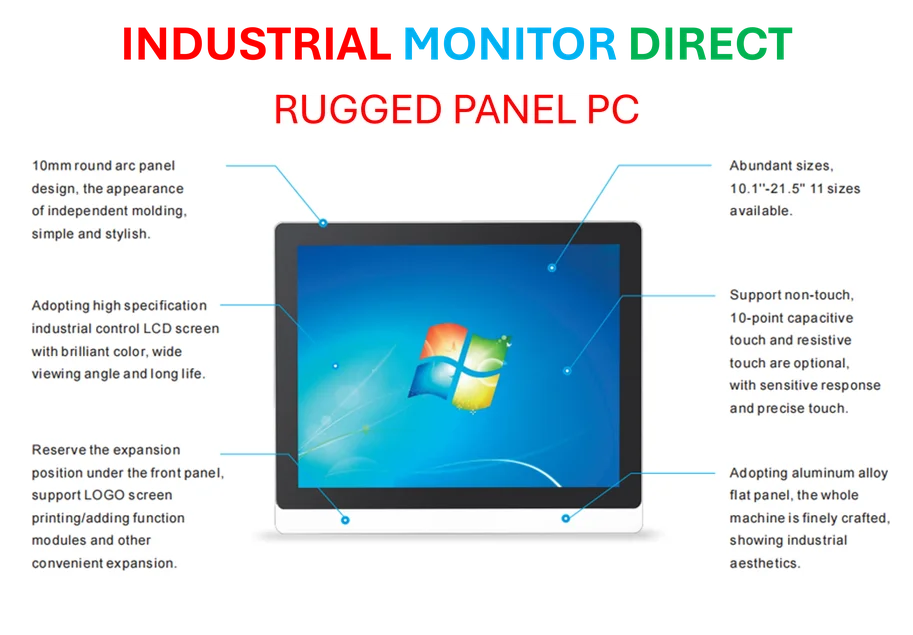

Look, the numbers sound great. Freeing up 92% of CPU resources? Cutting latency by 70%? Those are massive claims, and they probably hold up in a lab. The real test is the messy, fragmented world of actual user PCs with a hundred other processes running. An NPU is a dedicated piece of silicon, sure, but it's also a new attack surface that malware authors will absolutely target. And McAfee's requirement for an NPU of at least 40 TOPS immediately creates a haves-and-have-nots scenario. How many laptops out there right now actually meet that bar? It's a classic tech problem: the cutting-edge solution requires cutting-edge hardware, leaving most users behind for years. For businesses looking to deploy reliable, standardized security across a mixed fleet, this hardware dependency is a major complication, not just a performance boost.

The Bigger Picture and Skepticism

So we’re trading cloud dependence for hardware dependence. And let’s be skeptical about some of these capabilities. Deepfake detection of audio is a cat-and-mouse game that AI generators are winning. Phishing detection via local AI is cool, but phishing lures are constantly evolving. These chips accelerate pattern matching, but they don’t invent new intelligence. They’re making existing techniques faster and more efficient. That’s valuable, but it’s not a magic bullet. The most interesting play is Nvidia’s rack-scale confidential computing. Creating a “unified security domain” across an entire AI supercomputer rack is about protecting billion-dollar models and datasets. That’s where the real enterprise money is. The PC stuff feels like a trickle-down benefit—a way for chipmakers to sell the “AI PC” narrative with a tangible use case. It’s smart, and it probably does make your computer safer. But don’t think for a second it makes you invincible.