According to Windows Report, Microsoft has publicly reaffirmed its privacy stance amid growing AI and data concerns, describing privacy as a “fundamental human right.” The company stated its strategy focuses on transparency and user control, confirming that data from Microsoft 365 Copilot is not used to train foundation AI models and remains within the customer’s secure environment. This announcement follows past controversies, including accusations of tracking students. Separately, Microsoft recently reported a 16.7% revenue increase even as its stock fell around 10% due to significant AI-related investments. The company also continues its practice of providing BitLocker recovery keys to authorities when legally required.

The Business Of Trust

Here’s the thing: privacy pronouncements from big tech are almost always a business strategy. Microsoft is spending billions on AI, and Copilot is its flagship bet for the future of work. But if businesses and schools don’t trust it with their data, the whole thing falls apart. So this isn’t just philanthropy; it’s essential market positioning. They’re trying to build a moat of trust, especially against competitors who might have shakier reputations on enterprise data handling. The timing with Data Privacy Day is no accident—it’s a coordinated PR move to frame the narrative as they push AI deeper into organizations.

The Sticking Point: That Encryption Caveat

But let’s not skip over the big caveat. Microsoft straight-up admits it hands over BitLocker recovery keys when the law demands it. Now, they’re not alone in this: most companies comply with legal requests. But it highlights the real limit of their “you are in control” promise. Your control ends where a valid legal demand begins. It’s a reminder that for all the talk of privacy-by-design, ultimate data sovereignty is a tricky, often illusory concept when you’re using a cloud service. This is the tension every tech giant faces: promising robust security while maintaining the ability to comply with legal frameworks.

Revenue Up, Stock Down: The AI Gambit

So Microsoft’s revenue is jumping by 16.7% while its stock is taking a 10% hit. That tells you everything about the current moment. The cloud and enterprise software cash cows are still producing, funding an insane AI arms race. The stock dip reflects investor nerves about whether all that spending on data centers and chips will pay off. Basically, they’re betting the farm that AI features—which require deep data integration—will be the next must-have. But if privacy scandals derail adoption, that bet gets riskier. They need the trust to sell the product, and they need the product to justify the spend.

Who Actually Benefits From This?

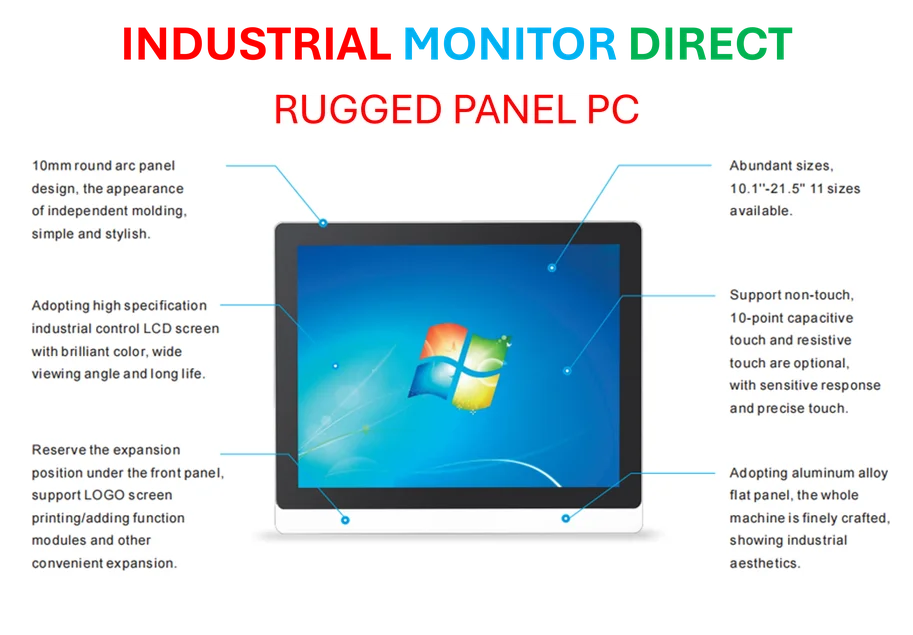

Look, the primary beneficiary of this privacy push is clearly Microsoft’s enterprise sales team. They now have a slick, official document to wave at cautious CIOs and compliance officers. For the average user? The promises about Copilot not training on your prompts are genuinely important: it means your proprietary business ideas or personal documents aren’t becoming fodder for a public model. But let’s be skeptical. The real test isn’t the press release; it’s the next security audit, the next transparency report, and whether they can avoid the next student-tracking headline. For Microsoft, trust is built, and can be shattered, in the cloud, with lines of code and terms of service. The reaffirmation is step one. Consistent action is the hard part.