According to Network World, Nvidia unveiled significant networking advancements at its GTC DC developer conference in Washington, D.C. The spotlight was on the ConnectX-9 SuperNIC, part of the Vera Rubin platform. This next-generation network interface card delivers 1.6 Tb/s of throughput per GPU, advanced RDMA capabilities, and PCIe Gen 6 support. It is engineered for AI workloads ranging from training trillion-parameter models to massive inference jobs. The SuperNIC incorporates hardware-level security with crypto acceleration and programmable I/O, which lets developers tailor infrastructure to future workloads rather than rely on one-size-fits-all security solutions. Nvidia also announced BlueField-4, which offers double the throughput of its predecessor and six times the compute power. Nvidia is positioning both products as foundations for high-performance AI inference. Together, these developments signal a strategic shift toward what Nvidia calls "AI factory networks."

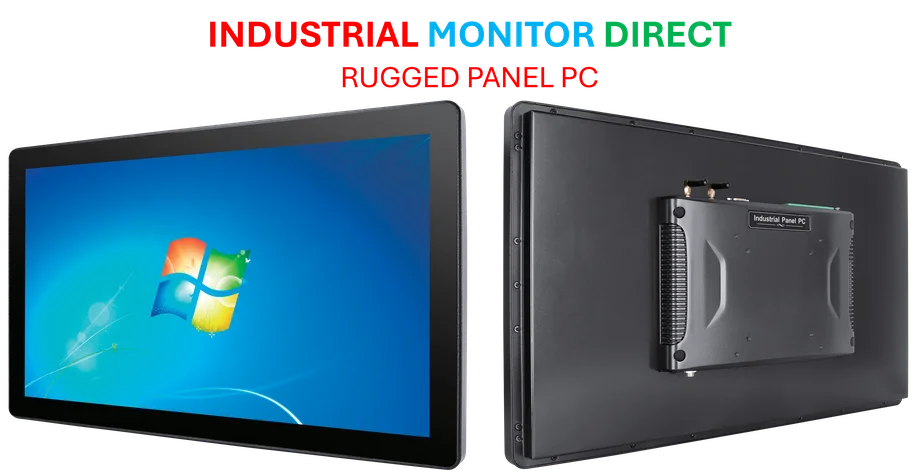

The AI Factory Infrastructure Revolution

Nvidia's AI factory concept represents a fundamental rethinking of how computational infrastructure for artificial intelligence is built. Traditional data centers were designed for web services and enterprise applications, but AI workloads behave differently. They are massively parallel, they require unprecedented bandwidth between compute elements, and they demand specialized security at scale. The 1.6 Tb/s per-GPU throughput is not an incremental improvement. It is the kind of leap required when models have grown from millions to trillions of parameters in just a few years. The goal is not to make existing infrastructure slightly faster but to build a new class of infrastructure designed specifically for AI.
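The following back-of-the-envelope sketch puts the 1.6 Tb/s figure in perspective. It estimates how long a single link at that rate would take to move one full copy of a model's weights. The example is illustrative only and rests on assumptions that do not come from Nvidia's announcement:

- 16-bit (2-byte) weights
- decimal units
- the full line rate sustained with zero protocol overhead
- one link with no parallelism

The function names are hypothetical.

```python
# Back-of-the-envelope estimate: idealized time for one 1.6 Tb/s link to move
# a full copy of a model's weights. Assumptions (hypothetical, for illustration):
# decimal units, full line rate sustained, no protocol overhead, a single link.

LINK_TBPS = 1.6        # per-GPU throughput quoted for ConnectX-9, in terabits/s
BITS_PER_BYTE = 8


def link_bytes_per_second(tbps: float) -> float:
    """Convert a link rate in terabits per second to bytes per second."""
    return tbps * 1e12 / BITS_PER_BYTE


def transfer_seconds(num_params: float, bytes_per_param: int,
                     tbps: float = LINK_TBPS) -> float:
    """Idealized seconds to send num_params weights over one link."""
    total_bytes = num_params * bytes_per_param
    return total_bytes / link_bytes_per_second(tbps)


if __name__ == "__main__":
    # Assumption: 16-bit weights, i.e. 2 bytes per parameter.
    for label, params in (("1 million params", 1e6), ("1 trillion params", 1e12)):
        print(f"{label}: {transfer_seconds(params, bytes_per_param=2):.6g} s")
```

Under these idealized assumptions, 1.6 Tb/s works out to 200 GB/s. At that rate, the 2 TB of weights in a one-trillion-parameter model take about 10 seconds to move. A one-million-parameter model would take about 10 microseconds. Real transfers add protocol overhead, so these figures are lower bounds.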

Beyond Raw Performance: The Architecture Shift

The significance of these announcements extends beyond the headline throughput numbers. Tightly integrating networking with GPU compute represents an architectural paradigm shift. PCIe Gen 6 support, hardware crypto acceleration, and programmable I/O together effectively create a network that handles AI workloads natively. Security and optimization can then be built into the fabric of the infrastructure rather than bolted on afterward. For enterprises building AI capabilities, this could substantially reduce the complexity of managing distributed training and inference across multiple locations while maintaining security compliance.

How This Reshapes the Competitive Landscape

Nvidia's networking push at its GTC events is a strategic move to maintain dominance across the entire AI stack. AMD and Intel compete largely on raw compute performance. Nvidia, by contrast, is building an ecosystem in which its GPUs work best with its own networking products. BlueField-4's sixfold compute improvement suggests Nvidia is embedding more intelligence directly into the network interface. This could offload tasks that would traditionally consume CPU cycles. The result is a formidable moat. The advantage comes not just from having the fastest chips but from an integrated system in which every component is optimized to work with the others.

The Implementation Challenges Ahead

Despite the impressive specifications, widespread adoption faces significant hurdles. The infrastructure requirements are substantial, and organizations may need to rethink their entire data center architecture to make effective use of 1.6 Tb/s per-GPU throughput. Interoperability with existing systems and competing technologies is also an open question. As Nvidia brings these networking products to market, enterprises will need to evaluate carefully whether their use cases justify the architectural overhaul. The programmable I/O features offer flexibility, but they also introduce complexity that many IT teams may not be prepared to manage.

What This Means for AI Development

Looking forward, these networking advancements could fundamentally change how we approach AI model development and deployment. The ability to treat distributed AI resources as a single, cohesive “AI factory” rather than a collection of discrete components could accelerate innovation cycles dramatically. Researchers and developers might soon be able to train and deploy models of previously unimaginable scale without being constrained by networking bottlenecks. However, this also raises questions about concentration of power in the AI infrastructure market and whether alternative approaches might emerge to challenge Nvidia’s integrated vision.
