According to Windows Report, OpenAI has launched GPT-5.1-Codex-Max, a significant upgrade over its previous coding model. The new model is designed for long, complex engineering tasks where the AI needs to plan, reason, and revise code across extended sessions without losing track of earlier steps. It scores 77.9% on the SWE-Bench Verified benchmark, up from the previous model's 73.7%, while using around 30% fewer thinking tokens. A new compaction system lets the model work on tasks spanning millions of tokens, which supports multi-hour agent loops and preserves context through extended work sessions. Codex-Max runs inside a restricted sandbox that limits file activity and blocks network access unless explicitly approved. It's available today in the Codex app, CLI tools, cloud dashboard, and IDE extensions, with API access coming soon.

Context is everything

Here's the thing about coding assistants: they've been great for quick snippets and simple functions, but they fall apart when you throw real engineering problems at them. You know, the kind that span multiple files and require actual planning? That's where this compaction system could be a game-changer. If the model can keep track of work spanning millions of tokens, developers might actually trust it with serious refactoring instead of treating it as fancy autocomplete.

Trained on real work

What really stands out to me is how they trained this thing. OpenAI built Codex-Max around real engineering work: pull requests, code reviews, full-stack development. These aren't academic exercises; they're the messy reality of software engineering. A lot of what coding models learn from is finished, polished code, but real development is about iteration and collaboration. If Codex-Max actually understands that workflow, we might see adoption move beyond individual developers to entire engineering teams. Think about it: could this become the default code reviewer on your team?

Safety and access

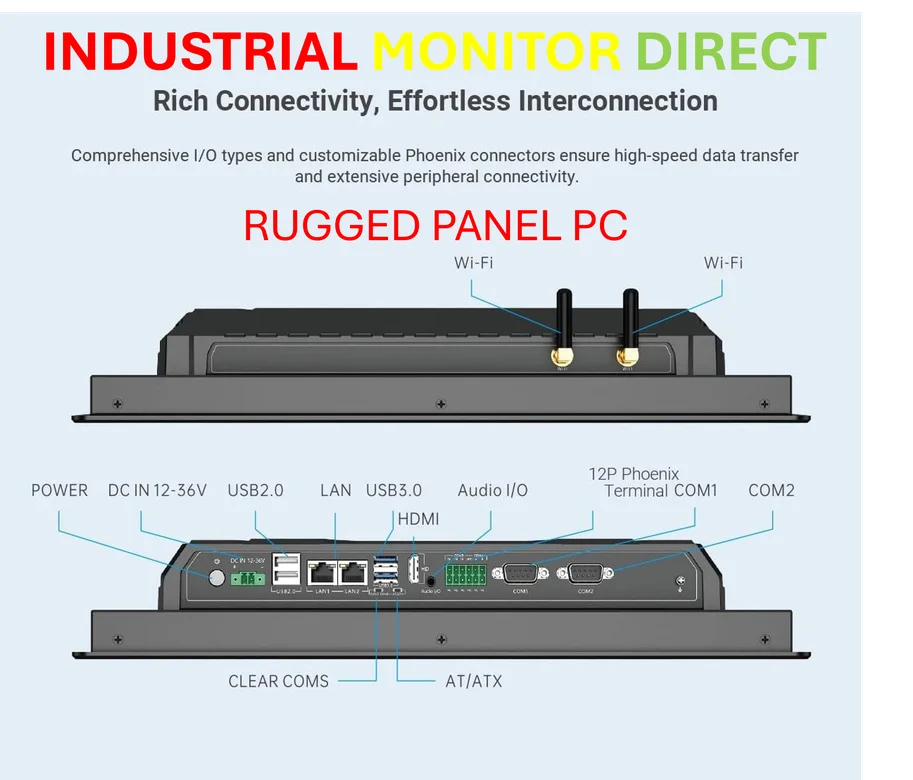

The sandbox approach makes a ton of sense. Letting an AI run wild on your codebase is terrifying, but the restricted environment with explicit approvals creates guardrails without completely handcuffing the tool. And the availability across all Codex surfaces means this isn't some experimental release – they're making it the default. That tells you how confident they are in the performance improvements.

Where this is headed

So what does this mean for developers? Basically, we’re moving from AI as a coding assistant to AI as a coding partner. The multi-hour agent loops suggest these tools will start taking ownership of larger chunks of work. But here’s my question – at what point do we stop calling it assistance and start calling it automation? The performance gains are impressive, but the real story is the shift toward autonomous engineering work. Check out the official announcement for the technical details, but the bottom line is clear: AI coding tools are growing up fast.