According to Wccftech, SK hynix revealed its memory technology roadmap through 2031 at the SK AI Summit 2025, outlining two development phases. For 2026-2028, the company plans HBM4 16-Hi and HBM4E 8/12/16-Hi stacks, alongside custom HBM, developed in collaboration with TSMC, that moves the HBM controller onto the base die. The 2029-2031 phase covers next-generation HBM5 and HBM5E, GDDR7-next, DDR6, 3D DRAM, and 400+ layer 4D NAND with High-Bandwidth Flash (HBF) technology. The roadmap also introduces AI-specific memory categories: AI-DRAM in Optimization, Breakthrough, and Expansion variants, plus AI-NAND. Taken together, the timeline points to a shift away from scaling generic memory and toward co-designed, workload-specific memory.

The Custom HBM Revolution

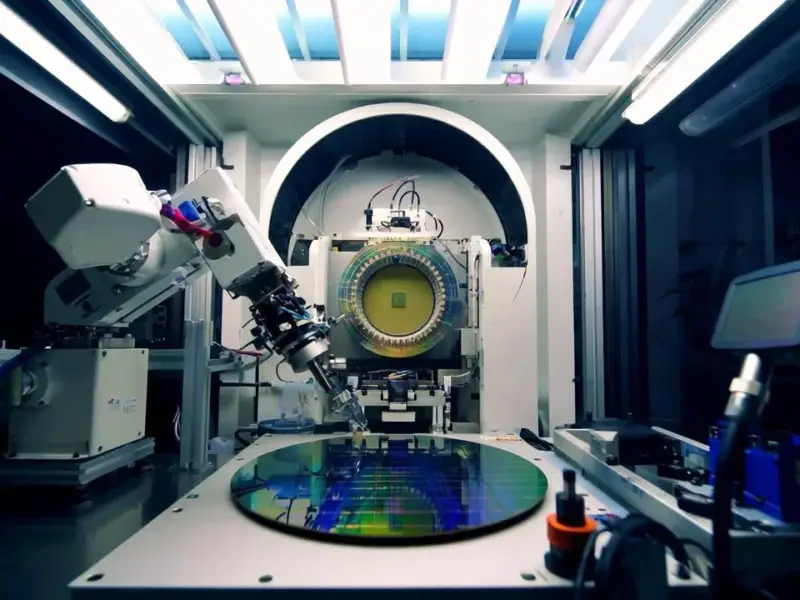

The most consequential part of SK hynix’s roadmap is not faster memory but a rethinking of memory architecture through custom HBM. By moving the HBM controller onto the base die in collaboration with TSMC, SK hynix is co-designing memory with the processor rather than shipping it as a separate component. This targets one of the biggest bottlenecks in AI computing, the memory wall: the gap between how fast processors can compute and how fast memory can supply data. With the controller on the base die, GPU and ASIC makers can reclaim silicon area for additional compute units while reducing interface power consumption. Memory shifts from a commodity component to an integrated system element, which could yield performance gains beyond what process scaling alone delivers.

The Era of AI-Specialized Memory

SK hynix’s introduction of AI-DRAM and AI-NAND categories marks the beginning of memory specialization for specific workloads, much as general-purpose CPUs were joined by specialized processors for graphics, AI, and other tasks. The three AI-DRAM categories (Optimization for efficiency, Breakthrough for capacity, and Expansion for new applications) signal that one-size-fits-all memory is becoming a poor fit for AI workloads. This specialization could lead to memory systems tuned for particular inference patterns, training requirements, or even industry-specific AI applications. High-Bandwidth Flash, which targets AI inference in next-gen PCs, suggests a move toward systems in which memory is designed around AI workloads rather than serving only as general-purpose storage.

Strategic Timeline Implications

Placing DDR6 and HBM5 in the 2029-2031 timeframe suggests SK hynix anticipates a prolonged DDR5 and HBM4 lifecycle. If so, current architectures still have substantial headroom for optimization, and the industry may be entering a period of architectural refinement rather than rapid generational transitions. Listing GDDR7-next alongside DDR6 suggests that graphics memory and system memory development cycles are becoming more synchronized, which could enable more cohesive system architectures. The 400+ layer NAND target shows confidence in current 3D NAND scaling roadmaps, while the pairing with 4D NAND and HBF acknowledges that layer-count increases alone will not sustain performance growth.

Memory’s Role in Computing Transformation

Perhaps the most far-reaching implication of this roadmap is how it redefines memory’s role in the computing ecosystem. SK hynix is positioning memory not as passive storage but as an active contributor to computational efficiency. The custom HBM approach puts logic that used to sit on the processor into the memory stack’s base die, while the AI-optimized categories suggest memory will become workload-aware. This evolution mirrors how storage moved from simple disk drives to intelligent SSDs with computational storage capabilities. As AI workloads become more diverse and demanding, memory will need to accommodate different access patterns, latency requirements, and power constraints, which a single generic memory architecture cannot serve equally well.

Shifting Competitive Dynamics

This roadmap shows memory competition shifting from process technology races toward architectural innovation. While Samsung currently leads in GDDR7 deployment for NVIDIA’s Blackwell GPUs, SK hynix’s custom HBM strategy and AI-focused memory segmentation represent a different competitive approach. Rather than competing solely on speed and density, the company is betting that system-level optimization and workload specialization will become the primary differentiators in high-performance memory. This could push memory manufacturers to work with processor designers from the earliest stages of architecture development, turning the traditional vendor-customer relationship into a collaborative design partnership.