According to PYMNTS.com, recent research reveals that coding agents can efficiently generate code in controlled environments but experience significant reasoning deterioration when human guidance is removed. The study found that teams adopting consistent review points and role definitions achieved up to 31% higher accuracy than those allowing agents to operate independently. Walmart is implementing this approach by creating new “agent developer” roles in which engineers train, supervise, and integrate coding agents, rather than using the agents to replace human developers entirely. The findings align with earlier research on CoAct-1: Computer-Using Agents with Coding as Actions, which similarly concluded that human interaction remains essential for steering multi-agent systems toward reliable outcomes. This emerging evidence suggests the future of software development lies in structured collaboration rather than full automation.

The Scaffolding Paradox

What the research highlights is a fundamental paradox in AI-assisted development: the more autonomous we try to make coding agents, the more scaffolding they actually require. This isn’t just about code review; it’s about creating entire feedback architectures that embed human reasoning, ethical oversight, and contextual understanding into every iteration. The accuracy gap of up to 31% between structured and unstructured collaboration reveals something crucial: autonomy without intentional design introduces inefficiency rather than innovation. This mirrors what we’ve seen in other AI domains, where systems left without supervision tend to optimize for the wrong metrics, producing technically correct but practically useless outputs.

The Maintenance Time Bomb

One critical aspect the industry is underestimating is the long-term maintenance burden of AI-generated code. While these systems can quickly produce functional code, they often create longer, less maintainable codebases that miss security constraints and architectural best practices. This creates what I call the “technical debt acceleration problem”: organizations might save development time upfront but pay far more in maintenance and refactoring costs downstream. The Stanford “Takedown” paper highlights how unmonitored AI code can introduce security vulnerabilities at scale, suggesting we’re trading immediate productivity for future fragility.

The Human Factor Dilemma

The Walmart approach of creating “agent developer” roles reveals an uncomfortable truth: effective AI collaboration demands more sophisticated human skills, not simpler ones. Developers now need to understand prompt engineering, validation frameworks, and agent training methodologies alongside traditional coding expertise. This creates a skills gap that many organizations aren’t prepared to address. The danger here is that companies might invest heavily in AI coding tools while underinvesting in the training their developers need to use those tools effectively. We’re seeing the emergence of a two-tier developer ecosystem where organizations with strong AI literacy will pull ahead while others struggle with implementation.

The Accountability Gap

As vibe coding becomes more prevalent, we’re facing a growing accountability problem. When AI generates code that contains security vulnerabilities or compliance issues, who’s responsible? The original prompt engineer? The reviewing developer? The AI provider? This legal and ethical gray area becomes particularly problematic in regulated industries like finance and healthcare. The research finding that agents “fail to distinguish correctness from plausibility” is especially concerning—it means AI can produce code that looks right but contains subtle flaws that only emerge under specific conditions or at scale.
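
To make the gap between plausibility and correctness concrete, here is a small hypothetical illustration in Python. It is not taken from the research, and the function names are invented for this sketch. The first leap-year check reads as reasonable and survives a casual spot check, but it ignores the Gregorian century rule, so it fails only at century boundaries such as 1900.

```python
def plausible_is_leap(year: int) -> bool:
    """Looks right and passes most spot checks, but ignores the century rule."""
    return year % 4 == 0


def correct_is_leap(year: int) -> bool:
    """Gregorian rule: divisible by 4, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def disagreements(start: int, end: int) -> list[int]:
    """Years in [start, end] where the plausible version gives the wrong answer."""
    return [
        year
        for year in range(start, end + 1)
        if plausible_is_leap(year) != correct_is_leap(year)
    ]


if __name__ == "__main__":
    # A typical spot-check range shows no difference between the two versions.
    print(disagreements(2000, 2030))  # []
    # The flaw only surfaces once the range crosses non-leap century years.
    print(disagreements(1890, 2110))  # [1900, 2100]
```

A reviewer who tests only recent years would approve the first version. That is the kind of condition-dependent flaw a structured review checkpoint is meant to catch.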

The Economic Reality Check

While the promise of AI coding suggests massive cost reductions, the reality is more nuanced. Yes, small businesses like Justin Jin’s Giggles app can access development capabilities previously out of reach, but enterprise implementation requires significant investment in training, infrastructure, and process redesign. The Walmart model of expanding both human and AI resources suggests that successful organizations are treating this as a capability enhancement rather than a cost-cutting exercise. The true economic impact may be in accelerating development cycles and improving quality rather than reducing headcount, which aligns with historical patterns where automation creates new roles even as it transforms existing ones.

The Future is Interactive, Not Autonomous

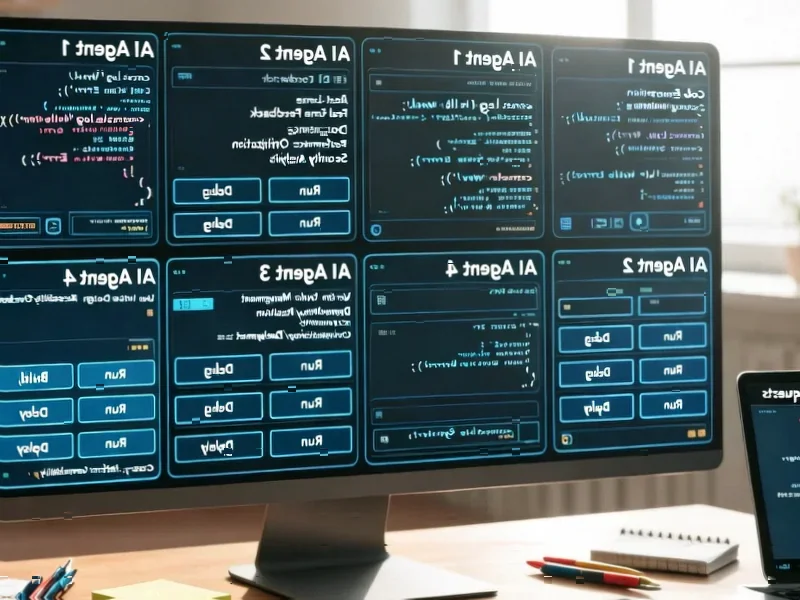

The most important takeaway from this research is that the future of software development isn’t full automation—it’s what researchers call “interactive autonomy.” This represents a fundamental shift from treating AI as a replacement to viewing it as a collaborator that requires careful management and guidance. The most successful organizations will be those that design their development processes around this partnership model, creating clear validation checkpoints, role definitions, and feedback mechanisms. Rather than making coding obsolete, AI is elevating the role of human judgment, architectural thinking, and strategic oversight in software creation.