According to Infosecurity Magazine, a UK government committee is pushing to make software providers legally responsible for insecure products after a series of devastating cyberattacks in 2025. Major companies including Marks & Spencer, Co-op, and Jaguar Land Rover suffered significant breaches, with M&S reporting £300 million in losses and Co-op having to shift funeral operations to manual processes. The Business and Trade Committee argues that voluntary security measures have failed and that developers face no penalties for releasing products with exploitable flaws. Its report recommends mandatory compliance with secure-by-design principles and cites the EU’s Cyber Resilience Act, which takes full effect in 2027, as evidence that stronger action is possible. The committee wants enforcement bodies to have the power to monitor adherence and issue penalties.

Finally, Some Accountability

Here’s the thing – we’ve been living in this bizarre world where software companies can ship incredibly insecure products and face zero consequences when those flaws get exploited. The costs always fall on the customers, the businesses, the public sector. Basically, it’s been a massive market failure where security is treated as optional rather than fundamental.

Simon Phillips from CybaVerse nailed it when he asked why victims should always bear the burden. Look at what happened with M&S – £300 million gone because of someone else’s insecure software. And we’re supposed to believe that voluntary guidelines are enough? That’s like asking car manufacturers to voluntarily include seatbelts while they’re busy cutting costs on brake systems.

But Will This Actually Work?

Now, the big question is whether this legislation will actually change anything or just create more paperwork. The UK wants companies to follow its Software Security Code of Practice, but right now that code is just voluntary guidance that companies self-assess against. Who’s going to define what “avoidable vulnerabilities” actually means? And how do you prove a company knew about a flaw but shipped anyway?

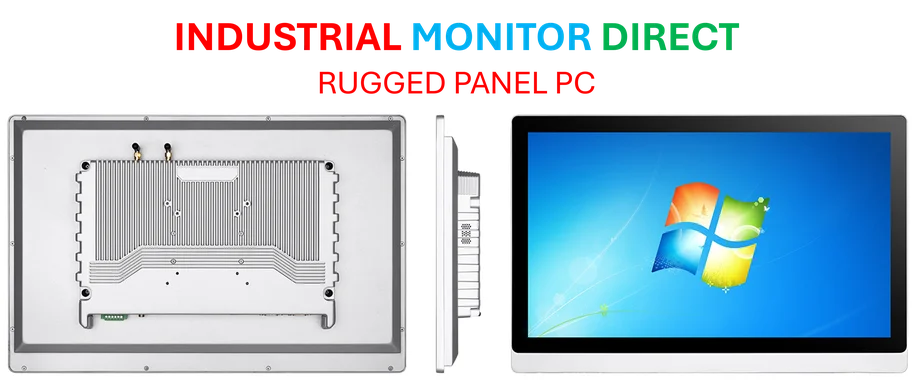

There’s also the risk that this could crush smaller developers while big companies just treat fines as a cost of doing business. We’ve seen this pattern before with GDPR: massive compliance overhead that favors established players. And let’s be honest, when it comes to industrial technology and manufacturing systems, the stakes are even higher. Companies that rely on industrial panel PCs for critical operations need security baked in from day one, not bolted on as an afterthought.

Following Europe’s Lead

The committee isn’t proposing anything radical here; they’re basically catching up to what the EU has already figured out. The Cyber Resilience Act shows that liability frameworks are possible, even if it doesn’t take full effect until 2027. But here’s what worries me: by the time the UK gets its act together, we’ll be years behind and still vulnerable.

I think the mandatory incident reporting piece is actually more important than people realize. Right now, we have no clear picture of the actual threat landscape because companies hide breaches until they’re forced to disclose them. Turning those reports into national threat intelligence could be transformative for everyone’s security posture.

So is this the moment software security finally grows up? Maybe. But legislation alone won’t fix a culture that’s prioritized features over safety for decades. The real test will be whether companies start treating security as a core responsibility rather than an annoying compliance checkbox.