According to Forbes, Microsoft security researchers have uncovered a serious privacy vulnerability called Whisper Leak that affects AI chatbots, including those from OpenAI, Mistral, xAI, and DeepSeek. The attack lets anyone who can observe your internet traffic infer whether a conversation concerns a specific topic with over 98 percent accuracy, even though the conversation itself remains encrypted. Researchers Jonathan Bar Or, Geoff McDonald, and the Microsoft Defender Security Research Team found that the vulnerability stems from how chatbots stream responses piece by piece (token by token, roughly word by word) rather than all at once. The streaming feature that makes conversations feel natural inadvertently creates patterns in data packet sizes and timing that reveal what a conversation is about. Government agencies, hackers on your local network, or even someone on the same coffee shop Wi-Fi could potentially use this technique without breaking encryption.

How Whisper Leak works

Here’s the unsettling part: encryption protects your words, but it doesn’t hide the rhythm of your conversation. Think of it like watching someone’s silhouette through a frosted glass window. You can’t see their face or read their lips, but you can tell whether they’re dancing, cooking, or exercising from their movements. Similarly, Whisper Leak analyzes the size and timing of the encrypted data packets traveling between you and an AI service. The encryption these services use (TLS) makes each chunk of ciphertext only a small, fixed amount larger than the text inside it, so when a response is streamed in small pieces, the sequence of packet sizes roughly mirrors the sequence of word lengths. Longer responses about complex topics create different patterns than short answers to simple questions. The researchers trained machine-learning classifiers to recognize these patterns, and the results were alarmingly accurate.
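To make the leak concrete, here is a minimal Python sketch under stated assumptions. It is not Microsoft's tooling. It assumes each streamed token travels in its own encrypted record with a fixed overhead (the hypothetical `RECORD_OVERHEAD` of 22 bytes roughly matches a TLS 1.3 record using AES-GCM with no padding), shows that an observer can recover every token's length from record sizes alone, and then labels responses with a toy nearest-centroid classifier, a deliberately simple stand-in for the models the researchers actually trained. All function names and the training data are illustrative.

```python
# Toy model of the Whisper Leak side channel. Illustrative assumptions only:
# one encrypted record per streamed token, fixed per-record overhead.
RECORD_OVERHEAD = 22  # hypothetical: 5-byte header + 1-byte content type + 16-byte tag


def stream_response(tokens: list[str]) -> list[int]:
    """Record sizes an on-path observer sees; the token text stays hidden."""
    return [len(tok.encode("utf-8")) + RECORD_OVERHEAD for tok in tokens]


def recover_token_lengths(record_sizes: list[int]) -> list[int]:
    """Subtracting the fixed overhead exposes each token's plaintext length."""
    return [size - RECORD_OVERHEAD for size in record_sizes]


def features(record_sizes: list[int]) -> tuple[float, float]:
    """Crude per-response features: number of records and mean record size."""
    return (float(len(record_sizes)), sum(record_sizes) / len(record_sizes))


def train_centroids(labeled: list[tuple[str, list[int]]]) -> dict[str, tuple[float, float]]:
    """Average the feature vectors of labeled example responses per label."""
    totals: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for label, sizes in labeled:
        count, mean_size = features(sizes)
        acc = totals.setdefault(label, [0.0, 0.0])
        acc[0] += count
        acc[1] += mean_size
        counts[label] = counts.get(label, 0) + 1
    return {label: (acc[0] / counts[label], acc[1] / counts[label])
            for label, acc in totals.items()}


def nearest_centroid(sample: tuple[float, float],
                     centroids: dict[str, tuple[float, float]]) -> str:
    """Pick the label whose centroid is closest in squared Euclidean distance."""
    def dist2(a: tuple[float, float], b: tuple[float, float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: dist2(sample, centroids[label]))


if __name__ == "__main__":
    reply = ["Money", " laundering", " is", " illegal", "."]
    sizes = stream_response(reply)
    print(sizes)                         # [27, 33, 25, 30, 23]
    print(recover_token_lengths(sizes))  # [5, 11, 3, 8, 1]

    # Hypothetical labeled traffic: "target" topic answers run long here.
    training = [("target", [40] * 30), ("target", [38] * 28),
                ("other", [25] * 4), ("other", [27] * 6)]
    centroids = train_centroids(training)
    print(nearest_centroid(features([39] * 29), centroids))  # target
```

Real traffic is noisier than this model: tokens are typically wrapped in JSON events, several tokens may share one record, and timing jitter matters. That is one reason a practical attack relies on trained models rather than simple subtraction.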

Why this matters

This isn’t just theoretical. The attack becomes more effective over time as attackers collect more examples. If someone monitors multiple conversations from the same person, their detection software gets even better at spotting specific topics. Microsoft noted that patient adversaries with sufficient resources could achieve success rates higher than the initial 98 percent figure. And here’s the thing: this vulnerability exists precisely because of the feature that makes AI chats feel responsive and natural. We’ve traded some privacy for better user experience without even realizing it.

The fixes and trade-offs

The good news is that major providers are already implementing solutions. OpenAI, Microsoft, and Mistral are now adding a random sequence of text of variable length to each streamed response. This extra padding scrambles the pattern that attackers rely on, essentially adding static to the signal. Your conversation still comes through clearly, but anyone analyzing the transmission pattern gets confused by the noise. The trade-off is extra bandwidth, since every response now carries bytes the user never sees. The research, published on arXiv, details both the vulnerability and potential defenses.
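The padding defense can be sketched the same way. This is a minimal illustration, not any provider's actual implementation: it assumes each streamed token is sent as a small JSON message with a hypothetical `pad` field holding 0 to `MAX_PAD` random letters, which the client simply discards. With an illustrative fixed overhead of 22 bytes per encrypted record, the same token now produces a different record size on almost every send, so subtracting a constant no longer reveals its length.

```python
import json
import secrets
import string

RECORD_OVERHEAD = 22  # illustrative fixed per-record encryption overhead
MAX_PAD = 32          # hypothetical upper bound on padding characters per message


def pad_token(token: str, max_pad: int = MAX_PAD) -> str:
    """Server side: wrap one streamed token with random-length filler."""
    filler_len = secrets.randbelow(max_pad + 1)  # uniform in [0, max_pad]
    filler = "".join(secrets.choice(string.ascii_letters) for _ in range(filler_len))
    return json.dumps({"token": token, "pad": filler})


def unpad(message: str) -> str:
    """Client side: keep the token, drop the padding."""
    return json.loads(message)["token"]


def observed_size(message: str) -> int:
    """Size of the encrypted record an eavesdropper would see."""
    return len(message.encode("utf-8")) + RECORD_OVERHEAD


if __name__ == "__main__":
    # Unpadded, " is" would always yield one record size; padded, it spreads out.
    sizes = {observed_size(pad_token(" is")) for _ in range(200)}
    print(min(sizes), max(sizes), len(sizes))
    print(repr(unpad(pad_token(" laundering"))))  # ' laundering'
```

Note what this padding does not hide: the number of records and their timing are unchanged, so it blurs the signal rather than erasing it, and every padded message costs extra bytes on the wire.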

Bigger picture

This discovery comes alongside other concerning findings about AI security. Cisco researchers recently found that AI models struggle to maintain safety rules over extended conversations: attackers can sometimes wear down guardrails through persistent questioning. So what’s the lesson here? Encryption alone doesn’t guarantee complete privacy. Even when your actual words are scrambled, the metadata (information about your information) can still reveal sensitive details. It’s like sealing your letters but leaving the return addresses, and the thickness of each envelope, visible. Someone monitoring your mailbox might not read your letters, but they could learn a lot from knowing who you’re corresponding with, how often, and how much you write.

What this means for you

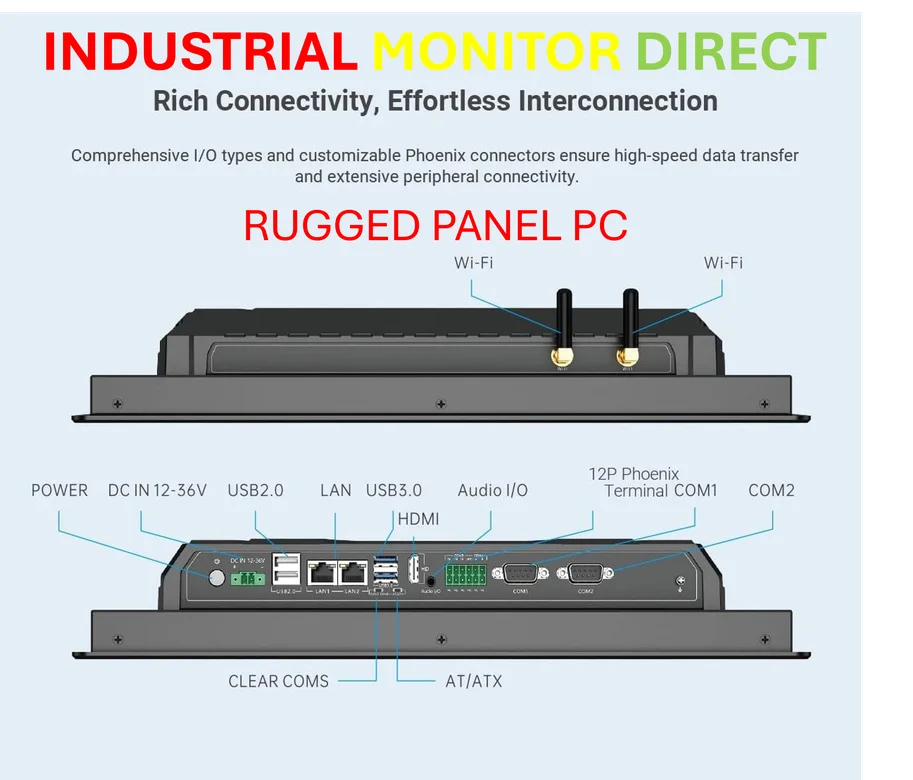

Basically, we’re entering a new era where security considerations need to evolve alongside AI capabilities. Privacy protection now requires attention to both what’s being said and the patterns that emerge from how it’s being said. While the immediate vulnerability is being addressed, this serves as a timely reminder that no technology is perfectly secure. Even systems that seem locked down might have side channels we haven’t considered.