The New Digital Partner in Law Enforcement

Across the United States, police departments are increasingly turning to artificial intelligence to handle one of law enforcement’s most time-consuming tasks: report writing. By feeding body camera audio transcripts into language models, officers can generate formal police reports in minutes rather than hours. The shift mirrors other sectors where AI writing assistants have become commonplace.

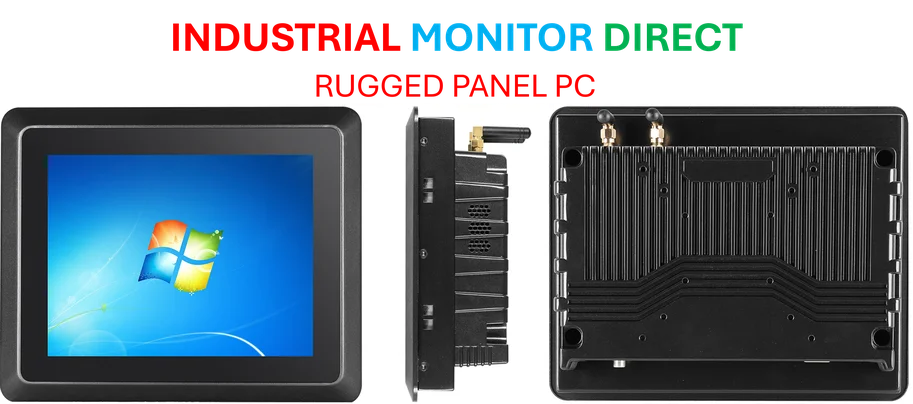

However, unlike other applications where errors might be merely inconvenient, mistakes in police documentation carry serious consequences. “When an AI system hallucinates or omits critical details in a police report, it’s not just a typo—it could mean someone’s freedom is at stake,” explains legal ethics professor Amanda Chen. “These documents form the foundation of criminal prosecutions and must be meticulously accurate.”

The Transparency Movement Gains Momentum

Until recently, Utah stood alone in requiring law enforcement to disclose AI assistance in report drafting. That changed in October 2025, when California adopted a similar mandate, setting a precedent for other states to follow. The growing regulatory response reflects mounting concerns about accountability in automated law enforcement documentation.

Legal experts note that while officers must ultimately sign off on final reports, the bulk of the content—including structure, formatting, and narrative flow—often comes directly from AI systems. This creates what some critics call an “accountability gap” where human oversight may be insufficient to catch subtle errors or biases embedded in machine-generated text.

Balancing Efficiency Against Due Process

Proponents argue that AI-assisted reporting allows officers to spend more time on patrol and less on paperwork. In departments struggling with staffing shortages, this efficiency gain can be significant. Report drafting is one example of how AI tools are changing professional work more broadly.

Yet defense attorneys and civil liberties advocates raise red flags about the potential for systematic errors. “These systems are trained on existing police reports, which means they may perpetuate historical biases in policing,” notes criminal defense attorney Marcus Johnson. “When a machine generates language suggesting ‘the suspect appeared nervous’ or ‘matched a criminal profile,’ we need to question where those assessments originate.”

The Technical Challenges of AI Documentation

Beyond legal concerns, technical limitations pose significant hurdles. AI systems sometimes struggle with accents, overlapping speech in multi-person interactions, or specialized terminology. These challenges parallel those in other AI applications where understanding context remains difficult.

Furthermore, the verification process presents its own complications. “Officers reviewing AI-generated reports may experience ‘automation bias,’ where they trust the machine’s output more than they should,” explains Dr. Rebecca Torres, who studies human-AI collaboration. “This is particularly dangerous when dealing with lengthy, complex reports where critical details might be buried.”

Broader Implications for Justice Systems

The integration of AI into police work reflects larger trends affecting multiple sectors. Just as technological advances are reshaping industries such as entertainment, law enforcement is undergoing its own digital transformation. However, the stakes in criminal justice are fundamentally different from those in other industries.

Legal scholars point to concerning parallels with other problematic uses of AI, such as AI-generated content created to manipulate or deceive. The potential for synthetic evidence or misleading documentation raises profound questions about truth and verification in legal proceedings.

The Path Forward: Regulation and Oversight

As more states consider disclosure requirements, the conversation is expanding to include standards for AI training, testing, and auditing. Some proposals would mandate independent validation of AI systems before their deployment in law enforcement contexts. These discussions reflect a broader push for responsible AI deployment across sectors.

The fundamental question remains: How do we harness the efficiency benefits of AI while protecting the constitutional rights of citizens? The answer likely lies in finding the right balance between technological assistance and human oversight, ensuring that AI serves as a tool for justice rather than a threat to it.

As this technology continues to evolve, the legal system faces the urgent task of adapting centuries-old principles of due process and evidence to a new era of automated documentation—a challenge that will define the future of policing and prosecution for years to come.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.